Scope of the survey

The aim of Task 8.4 “Quality Assessment for All” within the EURAXESS TOPIII project is to develop a set of quality assessment methods and tools for the network and to improve the way statistics are submitted to and processed by the European Commission. To map and analyze existing quality assessment practices within the EURAXESS network and to identify the most crucial bottlenecks in current data collection, we conducted a short online survey for both BHOs and Service Centers. In total, 104 responses from 38 countries were submitted. The highest numbers of responses per country came from Spain (10), France (9), Germany (8), and Norway (7). This report sums up the responses received to each of the questions and draws attention to the most common issues reported by the network members.

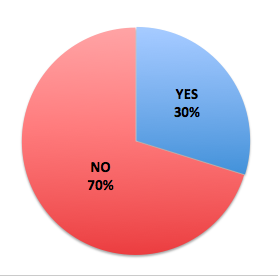

Question #1: Does your EURAXESS BHO/Service Center have any, written or unwritten, internal quality standards that set out the benchmarks of quality of your services?

- the question received 31 positive (“yes”) and 73 negative (“no”) responses;

- several respondents named the EURAXESS Service Commitment (3), the European Charter and Code, in particular its HR Strategy for Researchers (4), and ISO 9001:2000 certification (3) as their quality standard documents;

- a handful of respondents referred to their internal quality standards;

- some replied that their quality standards were either unwritten or not public.

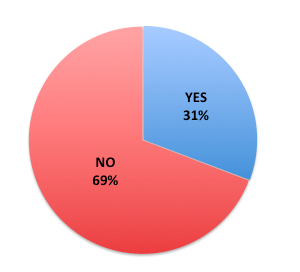

Question #2: Have you, as a BHO or Service Centre, ever conducted a customer satisfaction survey (in the form of a questionnaire, interview or whatsoever) to evaluate the quality of EURAXESS services you offer?

- 32 participants had conducted a customer satisfaction survey at least once to evaluate the quality of their services;

- the most popular forms of satisfaction survey were online questionnaires and interviews with foreign researchers;

- some respondents attempted to evaluate customer satisfaction with the overall work of the service/welcome centers, while others would evaluate only particular services provided;

- most of the participants tend to evaluate the satisfaction of their target groups with particular events offered by EURAXESS: trainings, information days, workshops, and so forth;

- the frequency of the surveys varies with their type, from occasional to annual or half-yearly.

Question #3: Which methods and/or tools do you use to evaluate the quality of your services and information delivered online, via your portal (e.g. focus group interviews, user journey surveys, Google Analytics, etc)?

- around 25% of all respondents replied that they used no tools to evaluate the quality of the services and information they delivered online;

- among the participants who responded positively, approximately half had applied qualitative methods of evaluation, such as (online) questionnaires and surveys, (focus group) interviews, and online feedback forms;

- the other half used Google Analytics and other analogous tools to do quantitative measurements: number of clicks, page visit counts, broken links, and so forth;

- a few had combined both approaches.

Question #4: What are the main difficulties that you face when reporting statistics to the Commission?

One quarter of all participants reported no difficulties in reporting statistics to the European Commission. The problems and suggestions of the rest can be grouped as follows:

- a significant number of partners identified the absence of a unified way/tool of collecting information as one of the main issues they struggle with;

- another issue in data collection is that different offices/departments within an institution are often responsible for different sorts of services, and hence for different inquiries;

- the classification of inquiries has been mentioned as a quite frequent issue, too: the existing list of inquiry types provided doesn’t reflect the variety of questions being asked and/or is not congruent with the services offered in reality;

- the relevance of the data to the institutional needs: existing categories do not always correspond to the internal categories being used by an institution;

- the lack of analysis of the data submitted is another thing to consider and improve;

- in conjunction with the two previous points, many BHOs face problems motivating their members to submit statistics: the importance of reporting is not understood, as the statistics are not being processed and do not seem to serve the internal needs of the member institutions;

- many indicated that data collection is perceived as a very time-consuming obligation for the network members.

To sum up, many respondents expressed concern about the clarity (what to register), the accuracy (the submitted numbers should in many cases be taken only as a rough estimate) and hence the quality of the submitted statistics. For many EURAXESS members, it is not always feasible to register each and every question, especially when queries are received or answered orally, and even more so if there is no proper tool for doing so. Furthermore, numbers of inquiries without accompanying explanations give little control over the veracity of the data and by no means reflect the quality of the services provided. Most of the members agreed that a solely quantitative approach (counting the queries) does not seem adequate for measuring the quality of the services, e.g. the satisfaction with and/or the rapidity of the services offered by the network.

THANK YOU FOR YOUR CONTRIBUTION!